This. Is. Science.

tl;dr

I wrote a thesis, but a Markov chain can rewrite it and make about as much sense as the original.

See also an updated version of this post for a better approach.

Doc rot

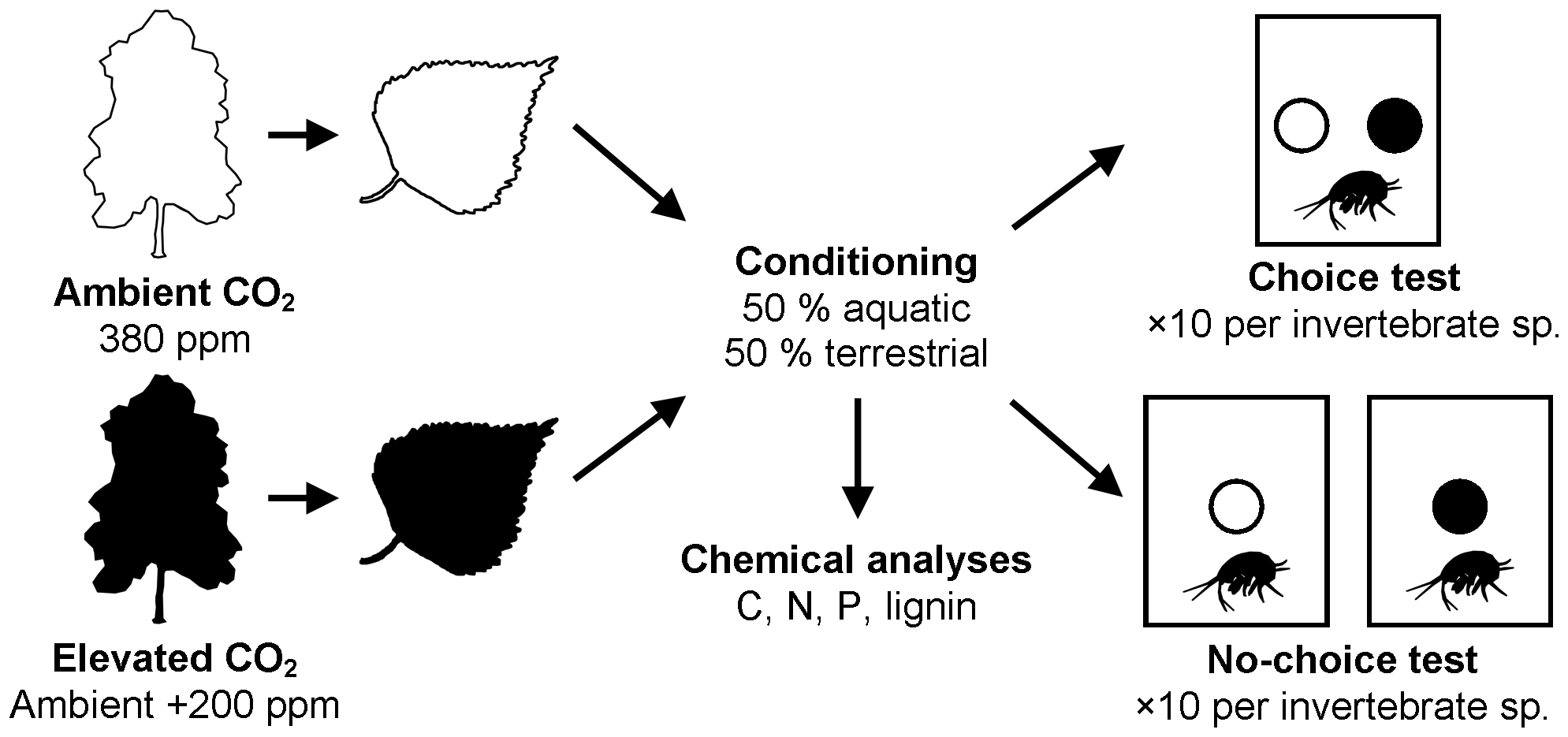

I wrote a PhD thesis in 2014 called ‘Effects of multiple environmental stressors on litter chemical composition and decomposition’. See my viva presentation slides here if you don’t really like words.

On graduation day, a stranger came up to me and, to paraphrase, said ‘you doctors should be proud of what you’ve achieved, you’re doing a great service’. I didn’t have the heart to tell him that I wasn’t a medical doctor. No, I was something nobler and altogether more unique: a doctor of rotting leaves.

You’re thinking: ‘gosh, what a complicated subject that must be; how could I ever hope to achieve such greatness?’ The answer is that you should simply take my thesis and use a Markov chain to generate new sentences until you have a fresh new thesis. The output will probably make as much sense as the original but won’t be easily detected by plagiarism software.

Heck, I’ll even do it for you in this post.

You’re welcome. Don’t forget to cite me.

Text generation

I’ll be using a very simple approach: Markov chains.

Basically, given an input dataset, a Markov chain can generate the next word in a sentence from the current word alone. The new word is chosen at random, but weighted by how often each candidate word follows the current word in your input file.
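To make the ‘weighted by how often’ idea concrete, here’s a minimal base R sketch of a first-order chain. It isn’t how {markovchain} works internally; the toy `tokens` vector and the `next_word()` helper are made up for illustration.

```r
# Minimal first-order Markov chain in base R (illustrative sketch only).
# The toy tokens are invented; they are not taken from the thesis.
tokens <- c("leaf", "litter", "decomposition", "in", "streams", ".",
            "leaf", "litter", "chemistry", "in", "woodland", ".")

# Pair each word with the word that immediately follows it
current   <- head(tokens, -1)
following <- tail(tokens, -1)

# Transition counts: rows are current words, columns are following words
counts <- table(current, following)

# Hypothetical helper: draw the next word, weighted by the transition counts
next_word <- function(word, counts) {
  if (!word %in% rownames(counts)) return(NA_character_)  # never seen as a current word
  weights <- counts[word, ]
  weights <- weights[weights > 0]
  names(weights)[sample.int(length(weights), 1, prob = weights)]
}

set.seed(1)
next_word("leaf", counts)    # always "litter": the only word seen after "leaf"
next_word("litter", counts)  # "decomposition" or "chemistry", equally likely
```

Feeding each returned word back into `next_word()` builds up a sentence one word at a time, which is the job `markovchainSequence()` does for us later on.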

There’s a great post on Hackernoon that explains Markov chains for text generation. For interactive visuals of Markov chains, go to setosa.io.

Text generation is an expanding field and there are much more successful and complicated methods for doing it. For example, Andrej Karpathy generated some pretty convincing Shakespeare passages, Wikipedia pages and geometry papers in LaTeX using the ‘unreasonably effective’ and ‘magical’ power of Recurrent Neural Networks (RNNs).

Generate text

Code source

I’ll be using modified R code written by Kory Becker from this GitHub gist.

In a similar vein, Roel Hogervorst did a swell job of generating Captain Picard text in R from Star Trek: The Next Generation scripts, which is certainly in our wheelhouse.

Data

Because I’m helpful I’ve created a text file version of my thesis. You can get it raw from my draytasets (haha) GitHub repo.

Alternatively you could get the data from the {dray} package.

```r
# Download the package from GitHub with
# remotes::install_github("matt-dray/dray")

# Load {dray} package and assign data to object
library(dray)
## Still D-R-A-Y
phd_text <- dray::phd
```

We’ll alter the data slightly so that it’s ready to pass into the Markov chain.

```r
# Remove blank lines
phd_text <- phd_text[nchar(phd_text) > 0]

# Put spaces around common punctuation
# so they're not interpreted as part of a word
phd_text <- gsub(".", " .", phd_text, fixed = TRUE)
phd_text <- gsub(",", " ,", phd_text, fixed = TRUE)
phd_text <- gsub("(", "( ", phd_text, fixed = TRUE)
phd_text <- gsub(")", " )", phd_text, fixed = TRUE)

# Split into single tokens
terms <- unlist(strsplit(phd_text, " "))
```

Script

Load the {markovchain} package and fit a Markov chain to the text data.

```r
# Load the package we need
library(markovchain)  # install first from CRAN
## Package: markovchain
## Version: 0.8.2
## Date: 2020-01-10
## BugReport: http://github.com/spedygiorgio/markovchain/issues

# Fit the Markov chain to the data
fit <- markovchainFit(data = terms)
```

We’re going to seed the start of each ‘sentence’ (a sequence of n words, where we specify n). We’ll do this by passing each of 50 unique values (1 to 50) to the set.seed() function in turn. The seed makes each sentence reproducible: it fixes the random starting word that markovchainSequence() picks when no start is given, and every random step after that.
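If the role of the seed feels abstract, this base R stand-in follows the same pattern as the loop below: one `set.seed()` call per sample, so any sample can be regenerated exactly. `generate_sequence()` is a hypothetical helper that only mimics the idea of `markovchainSequence()`; it is not that function’s implementation, and the toy tokens are invented.

```r
# Base R stand-in for the seeded loop (NOT markovchain's internals).
# Toy tokens are invented for illustration.
tokens <- c("bags", "permitted", "entry", "of", "invertebrates", ".",
            "bags", "were", "retrieved", "from", "streams", ".")

# Successors of each word as a named list; sampling uniformly from a
# word's successors weights them by how often they followed it
successors <- split(tail(tokens, -1), head(tokens, -1))

# Hypothetical helper: random starting word, then up to n random steps
generate_sequence <- function(n, successors) {
  current <- sample(names(successors), 1)  # random starting state
  out <- character(0)
  for (k in seq_len(n)) {
    candidates <- successors[[current]]
    if (is.null(candidates)) break  # dead end: no observed successor
    current <- candidates[sample.int(length(candidates), 1)]
    out <- c(out, current)
  }
  paste(out, collapse = " ")
}

# One seed per sample, mirroring set.seed(i) in the post's loop
samples <- vapply(1:5, function(i) {
  set.seed(i)
  generate_sequence(n = 8, successors)
}, character(1))

# Re-seeding with the same value regenerates the same text
set.seed(3)
identical(generate_sequence(n = 8, successors), samples[3])  # TRUE
```

Seeding inside the loop, rather than once at the top, means you can reproduce sample 37 on its own without regenerating samples 1 to 36 first.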

```r
markov_output <- data.frame(output = rep(NA, 50))

for (i in 1:50) {

  set.seed(i)  # fresh seed for each element

  markov_text <- paste(
    markovchainSequence(
      n = 50,  # output length
      markovchain = fit$estimate
    ),
    collapse = " "
  )

  markov_output$output[i] <- markov_text

}
```

Full output

This table shows 50 samples of length 50 that I generated with the code above, each beginning with a randomly selected token.

Cherry-picked phrases

The output is mostly trash because the Markov chain doesn’t have built-in grammar or an understanding of sentence structure. It only ‘looks ahead’ one word at a time, based on the current state.
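One common way to give the chain a bit more context is a second-order chain, where the state is the previous two words rather than one. This is a swapped-in technique, not what the code above does; here’s a minimal base R sketch with invented tokens and a hypothetical `generate2()` helper.

```r
# Second-order Markov chain sketch in base R: the state is the last TWO words.
# Toy tokens are invented for illustration.
tokens <- c("litter", "was", "affected", "by", "elevated", "CO2", ".",
            "litter", "was", "collected", "from", "streams", ".")

n <- length(tokens)
# Key each word by the two words before it, e.g. "litter was"
keys <- paste(tokens[1:(n - 2)], tokens[2:(n - 1)])
successors2 <- split(tokens[3:n], keys)

successors2[["litter was"]]  # "affected" "collected"

# Hypothetical helper: extend a two-word start by up to n_words words
generate2 <- function(start, n_words, successors2) {
  words <- start
  for (k in seq_len(n_words)) {
    key <- paste(tail(words, 2), collapse = " ")
    candidates <- successors2[[key]]
    if (is.null(candidates)) break  # this word pair never had a successor
    words <- c(words, candidates[sample.int(length(candidates), 1)])
  }
  paste(words, collapse = " ")
}

set.seed(1)
generate2(c("litter", "was"), n_words = 6, successors2)
```

More context tends to make the output locally more coherent, but with a source as small as one thesis a higher-order chain mostly ends up copying whole phrases back out, and it still has no notion of grammar.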

You can also see that brackets don’t get closed, for example, though an opening bracket is often followed by an author citation or the result of a statistical test, as we might expect given the source material.
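A quick way to quantify the unclosed-bracket problem is to count opening and closing brackets in each generated string. This is a minimal sketch: `unclosed_brackets()` is a hypothetical helper, and the example strings are made up rather than taken from the real output. With the real results you could pass in `markov_output$output` instead.

```r
# Count literal occurrences of a single character in each string
count_char <- function(x, ch) {
  lengths(regmatches(x, gregexpr(ch, x, fixed = TRUE)))
}

# Hypothetical helper: opening minus closing brackets per string
unclosed_brackets <- function(x) {
  count_char(x, "(") - count_char(x, ")")
}

# Invented example strings, tokenised like the thesis text
generated <- c("litter was affected ( Smith , 2010",
               "species differed ( P < 0.05 ) between streams",
               "results were visualised in the breakdown")

unclosed_brackets(generated)  # 1 0 0
```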

I’ve selected some things from the output that basically look like normal(ish) phrases. Simply rearrange these to build a thesis!

My favourites, with my comments:

| Generated sentence | Comment |
|---|---|
| Not all invertebrate species are among tree species | Literally true |
| Effect of deciduous trees may be appreciated | Well, they should be thanked for giving us oxygen and fruits |
| Species-specific utilization of Cardiff University | Humans inside, pigeons on the roof |
| Litter was affected by Wallace , Dordrecht | Who is this Dutch guy who’s interfering with my studies? |
| Bags permitted entry of stream ecosystem | I should hope so; I was investigating the effect of the stream ecosystem on the leaf litter stored in those bags |
| Permutational Analysis and xylophagous invertebrates can affect ecosystem service provision | My analysis will affect the thing it’s analysing? The curse of the observer effect. |
| Most studies could shift invertebrate communities | Hang on, this is the observer effect again; I thought I was studying ecology, not physics |
| This thesis is responsible for broad underlying principles to mass loss | Health warning: my thesis actually causes decay (possibly to your brain cells) |
| Carbon dioxide enrichment altered chemical composition | Aha, actually true |

Some other things that vaguely make sense:

- The response variables were returned to predict leaf litters

- shredder feeding was established for nutrient and urban pollution

- Leaf litter chemical composition are comprised of differing acidity in Ystradffin

- the no-choice situation with deionised water availability may reflect invertebrate feeding preferences

- ground coarsely using a wide spectrum of stream ecosystem functioning

- cages were already apparent

- Schematic of aquatic invertebrate species for identifying the invertebrate assemblages during model fitting

- Populus tremuloides clone under elevated CO2 had consistently been related to remove debris dams in woodland environments

- the need to account for microphytobenthic biofilms are particularly affected by the Linnean Society , and lignin concentration

- These findings suggest that the roles could not differ between time and bottom-up control of decomposition

- rural litter decomposition of litter layer of leaf litter will influence invertebrate communities

- the effects of carbon concentration in species’ feeding responses between tree species with caution given the 1970s , regardless of four weeks

- Results were visualised in the breakdown

- Measurements were in altered twig decay rates

- Litter was little work in decomposing leaf litter of litter resulted in a range of twigs , as a result in upland streams

- The basis of carbon compounds have influenced feeding preferences

- Annual Review of the physical toughness of rotting detritus altered chemical composition and woodlice Porcellio species

- Nitrogen concentrations and nitrogen transformations in both leaves grown under ambient CO2 levels of trembling aspen and invertebrate assemblage

So now you can just paste all this together. Congratulations on your doctorate!

Session info

```
## [1] "Last updated 2020-02-10"
## R version 3.6.0 (2019-04-26)
## Platform: x86_64-apple-darwin15.6.0 (64-bit)
## Running under: macOS Mojave 10.14.6
##
## Locale: en_GB.UTF-8 / en_GB.UTF-8 / en_GB.UTF-8 / C / en_GB.UTF-8 / en_GB.UTF-8
##
## Package version:
## assertthat_0.2.1 base64enc_0.1.3
## BH_1.72.0.2 blogdown_0.12
## bookdown_0.10 cli_2.0.1
## colorspace_1.4.1 compiler_3.6.0
## crayon_1.3.4 crosstalk_1.0.0
## digest_0.6.23 dray_0.0.0.9000
## DT_0.11 ellipsis_0.3.0
## evaluate_0.14 expm_0.999-4
## fansi_0.4.1 farver_2.0.1
## fastmap_1.0.1 ggplot2_3.2.1
## gifski_0.8.6 glue_1.3.1
## graphics_3.6.0 grDevices_3.6.0
## grid_3.6.0 gtable_0.3.0
## highr_0.8 htmltools_0.4.0
## htmlwidgets_1.5.1 httpuv_1.5.2
## igraph_1.2.4.1 jsonlite_1.6.1
## knitr_1.26 labeling_0.3
## later_1.0.0 lattice_0.20-38
## lazyeval_0.2.2 lifecycle_0.1.0
## magrittr_1.5 markdown_1.1
## markovchain_0.8.2 MASS_7.3.51.4
## matlab_1.0.2 Matrix_1.2-17
## methods_3.6.0 mgcv_1.8.28
## mime_0.9 munsell_0.5.0
## nlme_3.1.139 parallel_3.6.0
## pillar_1.4.3 pkgconfig_2.0.3
## plotrix_3.7-7 plyr_1.8.4
## promises_1.1.0 R6_2.4.1
## RColorBrewer_1.1-2 Rcpp_1.0.3
## RcppArmadillo_0.9.800.1.0 RcppParallel_4.4.4
## reshape2_1.4.3 rlang_0.4.4
## rmarkdown_2.0 scales_1.1.0
## servr_0.15 shiny_1.4.0
## sourcetools_0.1.7 splines_3.6.0
## stats_3.6.0 stats4_3.6.0
## stringi_1.4.5 stringr_1.4.0
## tibble_2.1.3 tinytex_0.18
## tools_3.6.0 utf8_1.1.4
## utils_3.6.0 vctrs_0.2.2
## viridisLite_0.3.0 withr_2.1.2
## wordcloud_2.6 xfun_0.11
## xtable_1.8-4 yaml_2.2.1
```